Estimated reading time: 9 minutes

Few things are as persistent as a myth. Some myths were once true, while others seem to come outta nowhere. An example of the former is the one that claims it’s best to inject medicine directly into someone’s heart in an emergency. It was perpetuated in the Pulp Fiction scene where Uma Thurman’s character is revived after an accidental overdose. But that practice fell out of favor way back in the ’70s once doctors learned of simpler, safer, more effective methods. An example of the latter myth type is the story of how late comedian Patrice O’Neal got his start. Legend has it he heckled a comedian who, then, dared him to get on stage and try it himself. So, he did. . . and killed! But the documentary of his life detailed a much more pedestrian genesis story which deflated many of his fans. Systems performance myths are no different.

Some systems performance myths were true once upon a time. Others seem to have been pulled outta thin air. This article outlines 5 of these stubborn performance myths, some that were once true and some that never were. Along the way, I’ll try to debunk them once and for all. We’ll list them in reverse order of prevalence based on my personal experience.

Table of contents

Myth #5: Tool Expertise == Performance Expertise

“Success is not about the tools. No one ever asked Hemingway what kind of pencil he used.”

— Chris Brogan

Do you think the first group of writers to master Microsoft Word became better writers than the ones who used typewriters? Or the first group of journalists to use smart phones/tablets were better than the ones using yellow notepads? The notion is completely absurd. Writers and journalists become experts through perfecting their craft, and productivity tools only enhance their innate abilities.

Yet on LinkedIn and Twitter, engineers describe themselves as “JMeter Expert” or “Intel VTune Master”. The implication is that tool mastery equates to mastery of performance engineering as a whole. Nothing could be further from the truth.

Systems Performance experts hold a firm grasp of the fundamentals. They understand Queuing Theory, Systems Architecture, CPU microarchitecture, OS/kernel/IO basics, Benchmark Design, Statistical Analysis, etc. The tool an expert wields only enhances his hard-earned ability to slay performance dragons or unlock deeper insights.

In fact, knowledge of ballpark latency numbers for various operations (e.g., syscalls, context switches, random reads, etc.) allows experts to perform back-of-the-napkin math to detect when these tools produce spurious results. You don’t develop that kind of nose for foul reporting from just studying the tools themselves.

Lastly, when the sexy tool-of-the-day replaces the time-worn tool of yesteryear, an expert’s foundational knowledge transfers seamlessly while the “tool expert” finds himself in a precarious employment marketability situation.

This performance myth was never true. Devote more time to honing your knowledge of systems performance fundamentals while complementing it with tooling.

Myth #4: Memory Consumption Tracking Is Enough

You’re the owner of a new restaurant. As such, you monitor aspects of day-to-day operations to stay on top of staffing requirements. Over the past few weeks the restaurant only peaked at 50% capacity and there’s never been a line outside for tables. Therefore, you see no need to beef up staffing.

But then you notice a spate of negative Yelp reviews: dine-in customers complaining about order wait times. “What? How can that be?! We’re never more than 50% occupied and there’s never a line outside! How is this happening?” After discussions with staff, you learn that the restaurant attracts a bourgeoning, local foodie crowd. A persnickety bunch, they linger for hours ordering multiple courses throughout their stay, frequently sending orders back over minor quibbles. Other dine-in tables demand far less yet endure long waiting times due to the few tables of foodies overworking the kitchen. Staffing based solely on restaurant capacity left a major blind-spot in your planning.

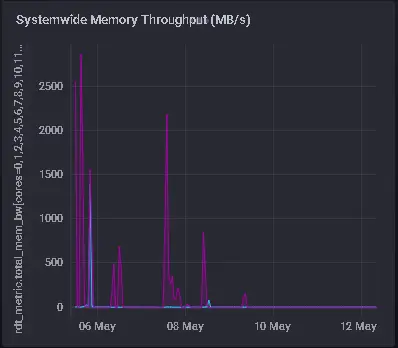

Likewise, there’s a myth that tracking only memory consumption is good enough for performance monitoring. To be honest, monitoring tools on the market perpetuate this myth. Most tools offer only global and per-process RAM utilization by default. They also track swapping behavior for RAM shortage detection, like the restaurant owner checks restaurant capacity or whether an outside queue forms. But what about checking for processes that monopolize the Memory Controller (MC) with a constant flood of requests? These rogue processes may only utilize 10% of available RAM, yet the R/W workload within that 10% may be *frantic*.

Remember, the MC is a shared resource just like that understaffed kitchen. If one process (or table) burdens that shared resource with a flood of R/W requests (or frequent food order demands), it impacts the performance of other processes traversing the same MC (like the other tables in the restaurant).

Now, I already know what you’re thinking. “Mark, a rogue application like that would expose itself via a sudden spike in CPU%, which I already monitor.” That rebuttal may’ve been more valid in the past. But now that customers no longer stand for crazy long-tail latencies, application developers have taken notice. Apps like ScyllaDB and RedPanda employ thread-per-core schemes whereby threads spin on assigned cores to avoid the latency of sleep/wakeup and context switching. This spinning keeps CPU% on those cores in the 90+ range even when idle. Trading applications in the HFT industry have always used this scheme. Therefore, CPU% is not the indicator it once was.

This is another example of a performance myth that was never true, though we’d never possessed nearly the MC monitoring capabilities that we do today. Make sure you’re tracking MC usage. You’ll be surprised how many odd performance anomalies this will uncover.

Myth #3: Sampling Profilers Work Great for Multithreaded Apps

Everyone in the industry has a favorite sampling profiler these days. They’re increasingly easy to use, impose minimal overhead, and offer all types of graphical UIs for intuitive navigation. Just fire one up and look at the descending order of functions imposing the most work on your CPU. Then you begin troubleshooting from the top of the list. Easy day at the office.

But hold on! That workflow only works for single-threaded apps. When you’re dealing with multithreaded applications, that descending order of high-CPU-usage functions can lead you on a bogus journey. In fact, the highest CPU consuming functions may run on threads which exert no effect on critical path performance whatsoever. Charlie Curtsinger and Emery Berger wrote about this profiler deficiency for multithreaded programs. And they devised a clever technique to address it: Causal Profiling.

Causal Profiling involves running multiple experiments during app runtime to determine the critical path source lines using a technique called “virtual speedups”. At the conclusion of these experiments, it produces causality estimates. For example, “optimizing line #38 in Function X by 5% will improve overall app performance by 22%.” And this is not just some pie-in-the-sky, fluff talk nonsense – it exists in tool form today. It’s called COZ, and it’s one of the most trusted tools in my personal toolbox.

I once used it at an engagement where COZ determined that the major chokepoint in a flagship app existed in a thread which the Dev Team swore had *zero* critical path impact. In the end, COZ was right.

Next time you’re profiling a multithreaded application, compare what you find with your favorite sampling profiler with findings from COZ. A few experiments like that will dispel this performance myth on its own.

Myth #2: CPU Clock Speed Is Paramount

To some of you this may seem like a no-brainer. But I’ve encountered this myth enough recently to place it 2nd on the list simply for its impressive longevity. On a team of 10 developers, at least one believes CPU A from Vendor A is faster than CPU B from Vendor B if CPU A has a faster clock. A few believe CPU E is faster than newer CPU G from the same vendor if CPU E has the faster clock. Because, after all, they both derive from the same vendor.

Years ago, I worked at a firm where the Lead Software Engineer of one group refused to upgrade to the latest Broadwell platform because it had a much lower clock speed than his older Sandy Bridge Workstation systems. Once I convinced him to try the Broadwell, the latency reduction impact on his trading algorithm absolutely stunned him.

This myth may have had a kernel of truth in the very early days of processors. But the advent of pipelined, superscalar CPUs with increasingly complex microarchitectures diminished clock speed into only a single factor in a laundry list of factors governing overall performance. The breakdown in Dennard Scaling makes this point even more relevant, thus the bigger push for more cores per CPU than for higher clock speed.

Wanna see how old this performance myth is? Here’s a short video from 2001 addressing what was then called “the Megahertz Myth.” Yeah, you read that right. . . *megahertz*.

Myth #1: Big O Complexity == Performance

“Throw the Structures and Algorithm books away. Look at research papers and measure. That’s all we can do. The books are kinda weirdly out of date.”

— Andrei Alexandrescu

Developers leave school understanding how to calculate and express in Big O notation the time complexity of an algorithm. This algo is constant (O(1)), this one is linear (O(N)), this one is logarithmic (O(log N)), and so on. Implicit in this Big O notation is a C constant which represents machine effects like CPU caches, branching effects, etc. And what’s the general guidance regarding that C constant from most Algorithm course books?

“When we use big-O notation, we drop constants and low-order terms. This is because when the problem size N gets sufficiently large, those terms don’t matter.”

And therein lies the issue: For a given problem, where is the demarcation where N is “sufficiently large”? Before that point, differences in that C constant can make an O(N²) algo outperform a competing O(N log N) algo. College courses must stress more the impact of machine effects like CPU caching and the benefits of profiling for them.

A particularly enjoyable presentation illustrating this is Alexandrescu’s CppCon 2019 Keynote linked below. In it, he chronicles his attempts at improving Insertion Sort over hundreds of elements. Though he succeeded in materially reducing the number of operations in his first attempts, these surprisingly resulted in runtime *regressions*:

On modern CPUs, doing *more* work (even if eventually throwing it away) can result in better performance despite algorithmic complexity. This myth that algorithmic complexity analysis equals performance analysis needs to go, and go soon!

Join the Myth-Buster Battle

While these 5 systems performance myths comprise the most common I’ve encountered recently, perhaps you’ve encountered different stubbornly persistent myths. Don’t turn a blind eye! Dispel them before they travel like an aerosol virus and infect the next gen of techies. And I’ll let you in on a little secret. . . some of the biggest super spreaders will be your closest, most experienced colleagues!

“The call is coming from *inside* the house!!!”

One Response