
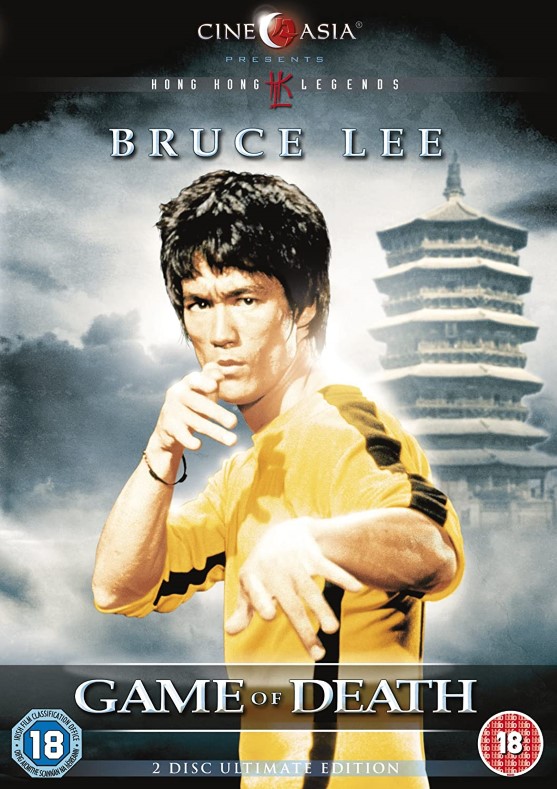

As a kid I might’ve been willing to fight you for criticizing any Bruce Lee movie. As an adult with a more refined fanaticism, I can now admit that his final film, Game of Death, sucks. In Lee’s defense, though, he died during filming, so salvaging the movie required major plot point revisions by producers. But what remained of his original script – namely, a protagonist facing increasingly formidable henchmen on successive floors of a pagoda in the finale – influenced scores of movies and multi-level arcade games in the decades since. Just like the Mortal Kombat-style games his posthumous film inspired, low latency optimization is its own multi-level game. Who are the henchmen at each level in this Game of Low Latency, and what challenges does each pose?

LATENCY LEVEL 1 – The IT Industry

Your first foe in this Game of Low Latency is “The IT Industry”, and he wants to shove all things high-throughput down your throat. He’ll have you struggling against default, throughput-oriented settings in everything from servers and network switches to OSes and open-source/COTS applications. What’s worse, ~90% of available “tuning best practices” guidelines assume high throughput is the reader’s chief aim.

How many times have default TCP settings, like Nagle’s algorithm or Delayed ACK, bitten you in your quest for low latency? NICs that hold off alerting your app about the first packet’s arrival just so they can buffer additional incoming packets? Server vendor guidelines that advocate wide Memory Channel interleaving? Monitoring tools that report little more than an average and standard deviation, as if system latency ever follows a normal distribution? The list goes on and on.
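To make the TCP defaults concrete, here is a minimal sketch of opting out of them per socket on Linux. The helper names (`disable_nagle`, `request_quick_ack`, `nagle_disabled`) are my own for illustration; the socket options themselves are the standard `TCP_NODELAY` and the Linux-specific `TCP_QUICKACK`.

```cpp
#include <cassert>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>
#include <unistd.h>

// Disable Nagle's algorithm so small writes are sent without waiting to
// coalesce with later data. Returns true on success.
bool disable_nagle(int fd) {
    assert(fd >= 0);
    int one = 1;
    return setsockopt(fd, IPPROTO_TCP, TCP_NODELAY, &one, sizeof(one)) == 0;
}

// Ask the kernel to send ACKs immediately instead of delaying them.
// Linux-specific, and the setting is not permanent: the kernel may fall
// back to delayed ACKs, so callers typically re-apply it after reads.
bool request_quick_ack(int fd) {
#ifdef TCP_QUICKACK
    int one = 1;
    return setsockopt(fd, IPPROTO_TCP, TCP_QUICKACK, &one, sizeof(one)) == 0;
#else
    (void)fd;
    return false;  // option not available on this platform
#endif
}

// Read the option back to confirm that Nagle's algorithm is off.
bool nagle_disabled(int fd) {
    int value = 0;
    socklen_t len = sizeof(value);
    if (getsockopt(fd, IPPROTO_TCP, TCP_NODELAY, &value, &len) != 0) {
        return false;
    }
    return value != 0;
}
```

Call `disable_nagle(fd)` once right after creating or accepting the socket. Whether `request_quick_ack(fd)` helps depends on your traffic pattern, so measure before and after.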

LATENCY LEVEL 2 – The Hardware

“The Hardware” notices your triumphant arrival at Level 2 and laughs derisively. He hurls at you poison-tipped System Management Interrupts (SMI), which suspend the OS to run firmware for various management purposes. These SMIs will rob your application of anywhere from hundreds of microseconds to as much as 100 ms of processing time.

As core speeds increase, core counts grow, and components become more densely packed, power and thermal throttling features will proliferate. Everything from the motherboard and CPU to PCIe devices and DDR DIMMs conspires to take naps on the job. Some years back, for example, our server vendor accidentally shipped us systems with DIMMs configured for Opportunistic Self-Refresh. So after brief quiet periods a DIMM would enter REFRESH mode autonomously, during which CPU requests couldn’t be serviced. CPUs and PCIe devices look for similar quiet periods to plunge into deeper C-states and ASPM L-states, respectively. Disabling these features requires navigating a maze of BIOS, kernel, and OS settings to get it right. I swear it feels like each year adds a whole new batch of power/thermal management features to watch for.

LATENCY LEVEL 3 – The Kernel

You’re feeling less tentative now as you ascend to meet “The Kernel” at Game of Low Latency Level 3. Unlike “The Hardware” at Level 2, this henchman isn’t smiling – he’s all business. He throws all kinds of throughput-enhancing darts at ya, and comes up with even more ammunition with every release. NAPI, automatic NUMA balancing, Transparent Huge Pages (THP), etc. Not to mention a host of kernel workqueue threads for all types of background tasks. Oh, that all sounds fine and dandy until you realize these often hamper your low latency efforts in surprising ways.

With plummeting processing time budgets for handling packets at 25/40/100Gb NIC rates, the kernel network layer simply can’t address your low latency networking needs. And the Linux CFS scheduler may be fair, but “fair” is anathema in the low latency space where priority access is valued. And don’t even get me started on all the device, timer tick, and function call interrupts you gotta minimize.[1]
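If you want to see which interrupts are landing on your application core, a small parser over /proc/interrupts goes a long way. The sketch below assumes the usual layout (a header row of `CPUn` columns followed by `label: count count ...` rows); the function name `interrupts_for_cpu` is hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Parse text in /proc/interrupts format and return, for each interrupt
// label, the count delivered to the given CPU. Rows that do not carry a
// count for that CPU's column (for example ERR or MIS) are skipped.
std::map<std::string, std::uint64_t> interrupts_for_cpu(std::istream& in, int cpu) {
    assert(cpu >= 0);
    std::map<std::string, std::uint64_t> counts;
    std::string line;
    if (!std::getline(in, line)) {
        return counts;
    }

    // Locate the column for this CPU. Offline CPUs are absent from the
    // header, so the column index is not always equal to the CPU number.
    std::istringstream header(line);
    const std::string wanted = "CPU" + std::to_string(cpu);
    std::string token;
    int column = -1;
    for (int i = 0; header >> token; ++i) {
        if (token == wanted) {
            column = i;
            break;
        }
    }
    if (column < 0) {
        return counts;
    }

    while (std::getline(in, line)) {
        const auto colon = line.find(':');
        if (colon == std::string::npos) {
            continue;
        }
        std::string label = line.substr(0, colon);
        label.erase(0, label.find_first_not_of(" \t"));

        // Read counts up to and including the wanted column.
        std::istringstream fields(line.substr(colon + 1));
        std::uint64_t value = 0;
        bool ok = true;
        for (int i = 0; i <= column; ++i) {
            if (!(fields >> value)) {
                ok = false;
                break;
            }
        }
        if (ok) {
            counts[label] = value;
        }
    }
    return counts;
}
```

Open `/proc/interrupts` with a `std::ifstream`, call this twice a few seconds apart, and diff the two maps. Any label whose count grew on your isolated core is a candidate for re-steering or disabling.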

LATENCY LEVEL 4 – The Application

You did it! You’ve reached the Big Boss, “The Application”, at Game of Low Latency Level 4! While you felt anxious at previous levels, your command of C++ has you brimming with confidence against this final foe. You’ve carefully chosen lock-free data structures and algorithms with Mechanical Sympathy in mind. Your L1d cache hit and branch prediction rates are optimal. But a wise warrior never underestimates his enemy – and, as it turns out, this enemy has quite the arsenal.

Weapon #1: Address Space

Did you pre-allocate and pre-fault all the memory you’ll need? Are your STL containers backed by that pre-allocated memory?[2] If your application is multi-threaded, did you pre-fault the thread stacks, too? Failure to consider these will result in runtime minor page faults which cost ~1 μs each, a relative eternity.
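Here is one way the pre-allocate, pre-fault, and PMR pieces might fit together. Treat it as a sketch: `PreallocatedArena` is a hypothetical helper, the 4 KiB page size is an assumption, and a production setup would more likely use `mmap` with `MAP_POPULATE` plus `mlockall` rather than a plain `new`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <memory_resource>
#include <new>      // std::bad_alloc, thrown when the arena is exhausted
#include <vector>

// Owns one block of memory, touches every page at construction, and
// serves it to PMR containers. The upstream is null_memory_resource, so
// running out of arena throws std::bad_alloc instead of silently
// falling back to the heap on the hot path.
class PreallocatedArena {
public:
    explicit PreallocatedArena(std::size_t bytes)
        : storage_(new std::byte[bytes]),
          size_(bytes),
          resource_(storage_.get(), size_, std::pmr::null_memory_resource()) {
        assert(bytes > 0);
        prefault();
    }

    std::pmr::memory_resource* resource() { return &resource_; }

    // True when p points into the arena's block.
    bool contains(const void* p) const {
        const auto addr = reinterpret_cast<std::uintptr_t>(p);
        const auto base = reinterpret_cast<std::uintptr_t>(storage_.get());
        return addr >= base && addr < base + size_;
    }

private:
    // Write one byte per page so first-touch page faults happen now.
    // The volatile pointer keeps the compiler from dropping the writes.
    void prefault() {
        constexpr std::size_t kPageSize = 4096;  // assumption: 4 KiB pages
        volatile std::byte* p = storage_.get();
        for (std::size_t offset = 0; offset < size_; offset += kPageSize) {
            p[offset] = std::byte{0};
        }
    }

    std::unique_ptr<std::byte[]> storage_;
    std::size_t size_;
    std::pmr::monotonic_buffer_resource resource_;
};
```

Usage looks like `PreallocatedArena arena(1 << 20); std::pmr::vector<int> orders(arena.resource()); orders.reserve(1024);`. Note that a monotonic resource never reuses freed memory, which suits containers sized once at startup. Thread stacks still need their own pre-faulting.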

Does your allocator release memory back to the OS during runtime, perhaps after many RAII-induced free or delete calls? If so, and your app is multi-threaded, you’ll conjure the latency-spiking wrath of Translation Lookaside Buffer (TLB) Shootdowns. This kind of interrupt is bad on receiving cores, but even worse on the sending core. It doesn’t even matter if it’s your designated background thread doing all the expensive allocations/deallocations. All those threads share the same address space, so they all end up paying the price.
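If you’re on glibc malloc, one blunt way to stop the allocator from handing memory back to the OS is to disable trimming and mmap-backed allocations at startup. This is a sketch under that glibc assumption; the function name `keep_freed_memory_in_process` is mine, and other allocators such as jemalloc or tcmalloc have their own knobs.

```cpp
#include <cassert>
#include <cstdlib>
#if defined(__GLIBC__)
#include <malloc.h>
#endif

// Configure glibc malloc to hold on to freed memory rather than
// returning it to the OS. Call once at startup, before the hot path
// begins allocating. Returns false when not built against glibc or
// when either setting is rejected.
bool keep_freed_memory_in_process() {
#if defined(__GLIBC__)
    // -1 disables trimming of the top of the heap.
    const bool no_trim = mallopt(M_TRIM_THRESHOLD, -1) == 1;
    // 0 stops malloc from serving large requests with mmap, whose
    // memory would otherwise be unmapped again on free.
    const bool no_mmap = mallopt(M_MMAP_MAX, 0) == 1;
    return no_trim && no_mmap;
#else
    return false;
#endif
}
```

The trade-off is a larger resident footprint, since freed memory stays mapped. In the low latency space that is usually a price worth paying.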

And if you’re page faulting *and* releasing memory back to the OS at runtime, prepare to deal with hiccups stemming from mmap_sem kernel lock contention. Oh, and here’s a fun fact about that mmap_sem lock: reads against the procfs memory map files[3] of a given process acquire that lock in read mode for said process. Can you imagine a harmless monitoring tool impacting the latency of your application just by reporting its memory usage??? It’s a dirty one, this Game of Low Latency.

Weapon #2: CPU Cache

False sharing is another common latency trap for multi-threaded apps, wherein multiple threads reference different objects that reside in the same 64-byte cache line. In the Universal Scalability Law (USL), this relates to the Coherence factor (β > 0), which imposes a retrograde performance effect. In other words, it’s a low latency/scalability killer. Intel VTune Profiler and perf c2c are go-to weapons for such occasions.
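The usual fix is to give each independently written object its own cache line. A minimal sketch, assuming the 64-byte line size mentioned above (C++17 also offers `std::hardware_destructive_interference_size` in `<new>`); the struct and field names are hypothetical:

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kCacheLineSize = 64;  // assumption: 64-byte lines

// alignas pads the counter out to a full cache line and aligns it on a
// line boundary, so no other object can share its line.
struct alignas(kCacheLineSize) PaddedCounter {
    std::atomic<std::uint64_t> value{0};
};
static_assert(sizeof(PaddedCounter) == kCacheLineSize);
static_assert(alignof(PaddedCounter) == kCacheLineSize);

// Each field is written by a different (hypothetical) thread. Without
// the padding, both counters would sit in the same line and every write
// would invalidate the other core's copy.
struct PerThreadStats {
    PaddedCounter packets_received;  // written by the receive thread
    PaddedCounter orders_sent;       // written by the send thread
};

// True when the two addresses fall on different cache lines.
bool on_distinct_cache_lines(const void* a, const void* b) {
    const auto line_a = reinterpret_cast<std::uintptr_t>(a) / kCacheLineSize;
    const auto line_b = reinterpret_cast<std::uintptr_t>(b) / kCacheLineSize;
    return line_a != line_b;
}
```

Padding costs memory and cache capacity, so reserve it for data that different threads actually write concurrently.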

Does your application include infrequently executed functions which nonetheless must fire quickly when called into action? Better employ cache-warming techniques to keep relevant instructions and data resident in the i-cache and d-cache, respectively.
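One common cache-warming approach is to run the rarely taken path on a regular cadence with a flag that stops it just short of doing anything real. The sketch below is illustrative only: `Order`, `OrderSender`, and the 12-byte wire format are all made up for the example.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

struct Order {
    std::uint32_t id;
    std::int64_t price;
};

class OrderSender {
public:
    // Every call runs the full encode path, which keeps its instructions
    // and the buffer resident in cache. Only a live call counts as sent;
    // a warm-up call stops before touching the wire.
    void send(const Order& order, bool live) {
        encode(order);
        if (live) {
            ++sent_;  // a real sender would hand buffer_ to the NIC here
        } else {
            ++warmups_;
        }
    }

    std::size_t sent() const { return sent_; }
    std::size_t warmups() const { return warmups_; }

private:
    // Hypothetical wire format: 4-byte id followed by 8-byte price.
    void encode(const Order& order) {
        std::memcpy(buffer_.data(), &order.id, sizeof(order.id));
        std::memcpy(buffer_.data() + sizeof(order.id), &order.price,
                    sizeof(order.price));
    }

    std::array<unsigned char, 12> buffer_{};
    std::size_t sent_ = 0;
    std::size_t warmups_ = 0;
};
```

Call `send(order, false)` from the idle loop and `send(order, true)` when the real event fires. The final transmit step stays cold in this sketch, so how far down the stack you push the warm-up is a design choice to benchmark.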

While your app threads may be pinned to separate cores with distinct L1/L2 caches, contention will remain at the shared LLC level. And these days, chip manufacturers partition the LLC across a mesh or a network of core complexes.[4] So access latency will vary depending on how far a core is from a given LLC slice.

Weapon #3: Compiler Flags

You probably never think twice about compiling your application with -O3 even though studies reveal an ongoing tug-of-war between -O2 and -O3 performance among compilers. And how often might you be suffering from the ~10 μs period of lower IPC whenever even light AVX2/AVX512 instructions execute in your code? Now you might be thinking, “But I don’t use any wide vector instructions in my code?” Well, if you’re compiling with -march=native then don’t be so sure. Look at the generated assembly, or compare runtime performance after appending the -mprefer-vector-width=128 build option. It’s an eye-opener.[5]
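A quick first check, before you go reading assembly, is to ask the compiler which vector extensions your flags have switched on. This sketch relies on the predefined macros that GCC and Clang set on x86; the function name `enabled_vector_extensions` is hypothetical.

```cpp
#include <cassert>
#include <string>

// Report which x86 vector extensions the compiler was allowed to target
// in this translation unit, based on its predefined macros. A listed
// extension means the compiler may emit those instructions, not that it
// did: the generated assembly is the final word.
std::string enabled_vector_extensions() {
    std::string out;
#if defined(__SSE2__)
    out += "SSE2 ";
#endif
#if defined(__AVX__)
    out += "AVX ";
#endif
#if defined(__AVX2__)
    out += "AVX2 ";
#endif
#if defined(__AVX512F__)
    out += "AVX512F ";
#endif
    if (out.empty()) {
        out = "none";
    }
    assert(!out.empty());
    return out;
}
```

Build it once with your production flags and once without -march=native, then compare. The -mprefer-vector-width=128 option steers the auto-vectorizer rather than the target ISA, so a runtime comparison is still needed to see its effect.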

NOTE: We discuss a lot of these application-level challenges and methods of overcoming them in our book Performance Analysis and Tuning on Modern CPUs.[6]

Game (Not) Over

This article only scratches the surface, as there is so much more to consider. But hopefully I’ve shed some light on the differences between throughput and latency optimization. In future articles, I’ll delve more deeply into each of the topics introduced here, either through war stories or demos.

We can never truly win this multi-level Game of Low Latency with any finality the way Bruce’s character does in Game of Death. The landscape shifts too much with every new technology, chip release, kernel update, or programming language enhancement for any closure of that sort. But maintaining a culture of knowledge-sharing, open-minded experimentation, and Active Benchmarking will at least provide us Infinite Lives to continue playing.

- [1] See /proc/interrupts for a full listing of interrupts targeting your application core.

- [2] For example, by using the Polymorphic Memory Resource (PMR) versions of STL containers – https://www.bfilipek.com/2020/06/pmr-hacking.html

- [3] /proc/<PID>/maps, /proc/<PID>/smaps, /proc/<PID>/numa_maps

- [4] Referred to as Non-uniform Cache Access (NUCA).

- [5] But things seem to be improving on the AVX2/AVX512 latency front, starting with Intel Ice Lake – https://travisdowns.github.io/blog/2020/08/19/icl-avx512-freq.html

- [6] Paid affiliate link